Jimmystats: A Crutin Consensus Take 2

So please throw out the old one; I've re-jimmied my historical recruiting spreadsheets to create a new formula for reporting a consensus star rating, kind of like 247's composite rating except it's not just 247 who has one now.

What I mean by "STARs": We are all familiar (I hope) with the idea of a 5-star player and a 4-star player, etc. For a long time on this site we've also been talking about "consensus" 5-stars, versus maybe "4.5-stars" who were 5-stars to half the scouting sites and 4-stars to the other half. Thing is, that's not very useful either; a guy whom every site ranked one spot below the last 5-star and a guy whom every site ranked just above the first 3-star are both "consensus 4-stars" but should have very different expectations.

Besides, the sites really use their own scoring systems, and map certain scores to star ratings just for the sake of comparison. Even the one that doesn't (Scout) ranks players against each other. That's a lot of information they're trying to give us about our recruits, but it's difficult to access. Why can't we have just one number on a simple scale that says all that?

Oh right we do: the 247 composite. Well why can't we have another?

The idea was to take all the different recruiting scoring systems and have them fit a simple star rating system. My previous attempt had some problems, mostly with ESPN not syncing up with the others. So here's the new attempt:

| STARs | Rivals | Scout | ESPN | 247 |
|---|---|---|---|---|
| ★★★★★ | 6.1 (Top 10) | Top 10 | 91+ | 99+ |
| 4.80 | 6.1 | Top 25 | 88-90 | 98 |
| 4.60 | 6.0 | Top 50 | 85-87 | 96-97 |
| 4.40 | 5.9 (t100) | Top 100 | 84 | 95 |
| 4.20 | 5.9 | Top 150 | 82-83 | 94 |
| ★★★★ | 5.8 (t250) | Top 250 | 81-82 | 92-93 |
| 3.80 | 5.8 | UNR 4-star | 80 | 90-91 |
| 3.60 | 5.7 | 3-star | 78-79 | 88-89 |
| 3.40 | 5.6 | " | 77 | 85-87 |
| 3.20 | 5.5 | " | 73-76 | 82-84 |
| ★★★ | 5.4 | " | 70-72 | 80-81 |
| 2.75 | 5.3 | 2-star | 67-69 | 77-79 |
| 2.50 | 5.2 | " | 64-66 | 74-76 |
| 2.25 | 5.1 | " | 62-63 | 72-73 |
| ★★ | 5.0 | " | 60-61 | 70-71 |
| 1.75 | 4.9 | " | 59 | 69 |

The one potentially confusing thing is "3.80" and not "4.00" is the baseline of a low-ish 4-star. Ditto "2.75" for a low-ish 3-star. I did that because the sites have up to 300 guys who get a 4-star ranking, and also have Top ~250 or 300 lists. Since what we think of as a "4-star" is usually the kind of guy who makes that list, I wanted the numbers to reflect it.

[after the jump: how I did it, and free spreadsheets yay!]

Converting Rivals: The Rivals database goes back to 2002 but until 2004 that was just on a 5-star scale. For the 2004 class they introduced the Rivals Rating system. I don't know why they came up with such weird numbers but a 6.1 was a 5-star, the 4-stars were broken into 6.0 to 5.8, 3-stars were 5.7 to 5.3, and 2-stars were 5.2 to 5.0. In a typical year there will be about 30 to 35 guys who get a 6.1, another 30-40 who get a 6.0, the rest of the Top 100-150 would usually be 5.9, and then the 5.8s would go past the Rivals 250 to hit about 220-250 players total. Ditto the 5.7s and 5.6s, after which my data start tailing off since I only pulled the guys who made it into position rankings (plus M recruits).

So everything from a 3-star down worked as a good baseline for star ratings, but I had to break up the 6.1s, 5.9s, and 5.8s to match some of the detail that you get from the other sites in the 4- and 5-star ranges. That was simple enough using the Rivals250: a 6.1 got the full 5 stars if he made the top 10, and otherwise got a 4.80. The 5.9s were split after Rivals 100, and the 5.8s were split at the end of the Rivals 250.
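For anyone who wants to replicate the Rivals step, here is a minimal sketch of the lookup, assuming the values in the STARs table above. The function name `rivals_to_stars` and the `rivals250_rank` parameter are made up for illustration; they are not from my spreadsheet.

```python
from typing import Optional

# STARs for Rivals Ratings that need no ranking split (values from the STARs table).
# Keys are the Rivals Rating times ten, to avoid float-equality surprises.
BASE_STARS = {
    60: 4.60, 57: 3.60, 56: 3.40, 55: 3.20, 54: 3.00,
    53: 2.75, 52: 2.50, 51: 2.25, 50: 2.00, 49: 1.75,
}

# Ratings split by Rivals250 rank: (rank cutoff, STARs inside cutoff, STARs outside).
SPLIT_STARS = {
    61: (10, 5.00, 4.80),   # 6.1: top 10 gets the full 5 stars
    59: (100, 4.40, 4.20),  # 5.9: split after the Rivals 100
    58: (250, 4.00, 3.80),  # 5.8: split at the end of the Rivals 250
}

def rivals_to_stars(rating: float, rivals250_rank: Optional[int] = None) -> float:
    """Convert a Rivals Rating (plus optional Rivals250 rank) to STARs."""
    key = round(rating * 10)
    if key in SPLIT_STARS:
        cutoff, inside, outside = SPLIT_STARS[key]
        ranked_inside = rivals250_rank is not None and rivals250_rank <= cutoff
        return inside if ranked_inside else outside
    if key in BASE_STARS:
        return BASE_STARS[key]
    raise ValueError(f"no STARs value listed for Rivals Rating {rating}")
```

An unranked player is passed with `rivals250_rank=None`, so an unranked 5.8 comes back as 3.80 and a 5.8 inside the Rivals 250 comes back as 4.00.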

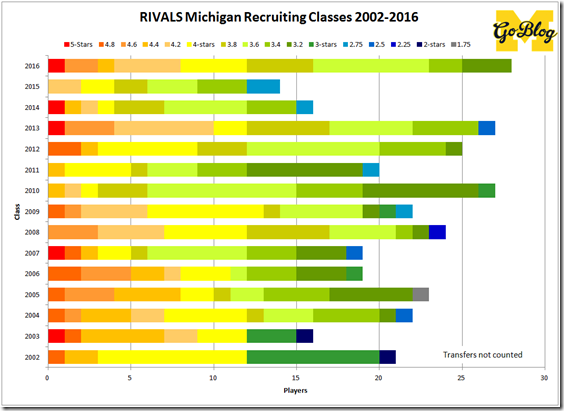

M's classes end up looking like this:

Converting Scout: The one site that doesn't have a scoring system beyond 5 star levels was the most difficult to break down more precisely. I had to use position rankings; I pulled a bunch of historical ones from Rivals and then averaged out what the STAR ratings would be from that. So for example Raymon Taylor was the 49th CB to Scout. In 26 years of rankings the #49 cornerback has been a "5.7" four times, a "5.6" 15 times, and six times was a "5.5." So it's a good bet on the Rivals scale that Taylor would be a 5.6, or a 3.40-star.
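The Raymon Taylor example can be worked through as a count-weighted average. This is only a sketch: it assumes "averaged out" means a weighted mean rounded to the nearest tenth, and it uses the three counts as listed (which add up to 25 seasons). The function name is hypothetical.

```python
# Historical Rivals Ratings for the #49 cornerback, using the counts from the example.
# Ratings are stored in tenths (57 means 5.7) so the arithmetic stays exact.
history_counts = {57: 4, 56: 15, 55: 6}

def expected_rivals_rating(counts: dict) -> float:
    """Count-weighted mean of historical ratings, rounded to the nearest tenth."""
    total = sum(counts.values())
    weighted = sum(tenths * n for tenths, n in counts.items())
    return round(weighted / total) / 10

print(expected_rivals_rating(history_counts))  # 5.6
```

The raw mean is 1398 / 25 = 55.92 tenths, which rounds to a 5.6, and a 5.6 sits on the 3.40 row of the STARs table.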

The old stereotypes of the sites were driven home pretty strongly when I started getting into this: ESPN's rankings beyond the top 150 or so start to make progressively less sense: they seem to fire-and-forget with a lot of dudes, and give bumps to the players who participate in their All-American games. Rivals also gets kind of sloppy after their 250; their regional evaluators are not all created equal. Just from certain rating disparities repeated across classes I've developed a pet theory that whoever covers Mississippi-Alabama-Arkansas falls in love with every player he ever sees, whereas whoever's in charge of the Midwest believes the last "real" football player was Red Grange. Unless Michigan or Ohio State signs him; then he's a 3-star. 247 is hard to get a read on—they are more reactive to late things, which probably will make them better at predictions, but they've only been scouting full classes since 2012. They're also way deeper; the other sites rank to at most 300 while 247 keeps going into the 1,000s.

Scout, meanwhile, is meticulous in ranking players against other players. They rank players in states, rank them by position, rank them by position in their states, rank them by position in their regions, and rank them in smaller position groups except, thankfully, DEs.

I prefer larger group rankings for two reasons: a larger pool means there are more players to triangulate from, and players grow into new positions so regularly that unnecessary chops just increase the likelihood of rating a guy at a position he won't play. "All-purpose back" maybe meant something in an era of dedicated West Coast passing out of a pro set, but most teams today are either shotgun, single-back or I-back, meaning all RBs are "all-purpose." Ranking centers is just a head-slap.

| Level | Pre-'13 | Since '13 |
|---|---|---|
| 5-star | 85-100 | 90-100 |
| 4-star | 80-84 | 80-89 |
| 3-star | 75-79 | 70-79 |
| 2-star | 68-74 | 60-69 |
| 1-star | 55-67 | 50-59 |

Converting ESPN:

ESPN actually proved the most difficult, since they tend to pack some ratings and then have very few at the next number. And their ratings aren't even on the same scale as each other. First off they switched to a new rating system after 2012. Also in their first few years they didn't distribute those ratings the same as later—players with a "79" (high 3-star) in 2007 almost cracked the top 100.

They have a fuller explanation of their ratings here, but the table above shows how the two scales compare.

Since they're not apples to apples, I either had to square them off or find some way to get the ratings to match. In my favor, they've been putting out rankings of their top ~200 players since 2006 (they went to 300 in 2006). As I stretched and compressed, I could use their national rankings to approximate divisions between the ratings. Pictorially speaking, I had to make the blue curve match the red curve below.

For the 5-stars I compressed every other ranking point, so like old 98 (NTO98) and 99 are now a 99, old 97 is now 98, old 95 and 96 are the new 97, and on down until 85(o)=90(n). Expanding the 4-stars was more difficult. Simply rolling down the line didn't match the curve of other years until I played around a whole bunch. Ultimately an 84(o)=88 unless he was in the Top 15, in which case he gets an 89. 83(o)=87; 82(o)=86 if he cracks the top 50 and 85 if he doesn't; and 80(o) was split up between 83 (top 150), 82 (top 200), 81 (top 330) and 80 (not ranked). It wasn't perfect, especially since in 2007 they were giving 79s to top-150 players, but this got me pretty near a match:

The shift affected guys like Donovan Warren, Kyle Kalis and Joe Bolden, who were 80s in their years but would be more like 83s in the current system.

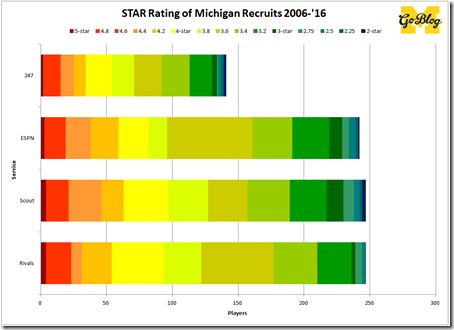
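The old-to-new ESPN conversion described above can be sketched roughly like this. Two loud caveats: only the 98/99, 97, 95/96 and 85 rows of the 5-star compression are spelled out in the text, so the rows in between are filled in by continuing the same pair-then-single pattern (an assumption), and the text gives no rule for an old 81, so the sketch refuses to guess. The names `convert_old_espn` and `national_rank` are made up for illustration.

```python
from typing import Optional

# Old (pre-2013) 5-star ratings compressed onto the new 90-99 range.
# Stated in the post: 98/99 -> 99, 97 -> 98, 95/96 -> 97, 85 -> 90.
# Everything between is an ASSUMPTION that continues the alternating pattern.
FIVE_STAR_MAP = {
    99: 99, 98: 99,
    97: 98,
    96: 97, 95: 97,
    94: 96,
    93: 95, 92: 95,
    91: 94,
    90: 93, 89: 93,
    88: 92,
    87: 91, 86: 91,
    85: 90,
}

def convert_old_espn(old: int, national_rank: Optional[int] = None) -> int:
    """Map a pre-2013 ESPN rating to the post-2013 scale; rank None means unranked."""
    rank = national_rank if national_rank is not None else float("inf")
    if old in FIVE_STAR_MAP:
        return FIVE_STAR_MAP[old]
    if old == 84:
        return 89 if rank <= 15 else 88
    if old == 83:
        return 87
    if old == 82:
        return 86 if rank <= 50 else 85
    if old == 80:
        if rank <= 150:
            return 83
        if rank <= 200:
            return 82
        if rank <= 330:
            return 81
        return 80
    # Old 81s and anything below 80 are not covered by the rules in the text.
    raise ValueError(f"no conversion rule given for old ESPN rating {old}")
```

So an old 80 ranked inside the top 150 converts to an 83, which is the Warren/Kalis/Bolden case.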

So now I have ESPN ratings going back to 2006 that all mean more or less the same thing. But getting it to match up with the rankings of the other three sites was challenging. It took a lot of fiddling around until it even passed the eye test, and I split up the ratings into more categories in the process. When I went to compare all of this, well, ESPN still stuck out. Look at that blob of 3.6s:

Those are guys who got a 78 or a 79 to ESPN, i.e. a high-3-star. However when I looked at the list of those guys, it wasn't a matching things problem; it was that ESPN really just had a lot of otherwise 4-stars rated much lower than the other sites:

| Name | ESPN | ESPN Rk | Rivals | Scout | 247 |
|---|---|---|---|---|---|
| William Campbell | 79 | 22nd OT | 5th DT | 6th DT | n/a |
| Boubacar Cissoko | 78 | 24th CB | 4th CB | 3rd CB | n/a |
| Erik Magnuson | 79 | 27th OT | 10th OT | 15th OT | 6th OT |
| Toney Clemons | 78 | 43rd WR | 12th WR | 10th WR | n/a |
| Cullen Christian | 79 | 18th CB | 8th CB | 3rd CB | 10th CB |
| Justice Hayes | 79 | 22nd RB | 3rd APB | 14th RB | 6th APB |
| Ryan Van Bergen | 78 | 13th TE | 18th SDE | 8th DE | n/a |
| Brennen Beyer | 79 | 24th DE | 16th SDE | 12th DE | 11th OLB |
| Tom Strobel | 78 | 61st DE | 16th WDE | 12th DE | 14th WDE |
| Sam McGuffie | 79 | 30th RB | 10th RB | 7th RB | n/a |
| Michael Shaw | 78 | 59th RB | 7th RB | 29th RB | n/a |
| Amara Darboh | 78 | 82nd WR | 30th WR | 32nd WR | 26th WR |
| Josh Furman | 78 | 38th OLB | 38th ATH | 7th S | n/a |
| Greg Mathews | 78 | 33rd WR | 8th WR | 39th WR | n/a |

Converting 247. Their 100(+) rating system is kind of like a teacher giving out grades, and that's natural enough to make sense of. I triangulated them last because they have the greatest range. Matching them to ESPN's was tough but I ultimately made the latter move to fit the former since converting a 100 scale to one of five grades for a product of American public schools is second nature.

While 247 does go over 100 on occasion, that is a rare enough occurrence for 99 and above to register as the annual group of 5-stars.
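As a rough sketch, the 247 column of the STARs table can be read as a set of rating floors. The band values come straight from that table; the function name `stars_from_247` is hypothetical, and treating anything under 69 as "not on the chart" is an assumption.

```python
# (minimum 247 rating, STARs) pairs from the 247 column of the STARs table, highest first.
BANDS_247 = [
    (99, 5.00), (98, 4.80), (96, 4.60), (95, 4.40), (94, 4.20),
    (92, 4.00), (90, 3.80), (88, 3.60), (85, 3.40), (82, 3.20),
    (80, 3.00), (77, 2.75), (74, 2.50), (72, 2.25), (70, 2.00),
    (69, 1.75),
]

def stars_from_247(rating: int):
    """Return the STARs value for a 247 rating, or None below the lowest band."""
    for floor, stars in BANDS_247:
        if rating >= floor:
            return stars
    return None  # assumption: ratings under 69 are left unconverted
```

Because the first band has no ceiling, the rare 100-plus rating lands in the 5-star group along with the 99s.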

I've posted the new values on that roster spreadsheet I keep. I'll embed again but if you want to see it in all its glory, take the link.

Big changes from old system: Mark Huyge, Jack Miller, Patrick Omameh, JT Floyd, Alex Mitchell, Chris Richards, Christian Pace, Mike Williams, Courtney Avery, Justin Boren, Russ Bellomy, and Troy Woolfolk all jumped at least 3/10ths of a star. For the centers in there that's because I got more center rankings. The rest were due to significant differences in ESPN rating.

So now we've got this. I expect I'll be playing around with it in the future.

February 9th, 2016 at 4:27 PM ^

To make ESPN even more difficult they also have 4-star 79s and 3-star 79s. Mags is an example.

EDIT: Beyer, Fitz, Hayes, Hollowell, Jeremy Jackson and Marvin Robinson were too.

February 10th, 2016 at 1:19 PM ^

On Seth's graph of where they rated M guys lower than the other services, they were right just about every time. Hmm

February 10th, 2016 at 10:24 AM ^

With so much info, surely I can come up with something insightful to add or comment on...

//stares out window

:(

February 10th, 2016 at 10:26 AM ^

you can tell Seth never had to deal with Common Core math.

Well done man...well done!

February 10th, 2016 at 10:40 AM ^

I don't understand the last graph. How do you get the available starts? What is that reflecting? I'm confused on how to read it. I understand the bar part of course.

February 10th, 2016 at 11:30 AM ^

My guess is that it means we need 5 stars and 2.75 stars and avoid 3 stars as they are less likely to start.

Also are specialists considered real people? Because they typically get low rankings despite high likelihood to start.

February 10th, 2016 at 11:20 AM ^

I enjoyed this post.

February 10th, 2016 at 11:42 AM ^

I'm still curious if it ends up having much value. As you point out, the sites are inconsistent in how well they even try to rank players. I also wonder what is the best way to treat recruits who have one big negative outlier, or in the case of low rated recruits, one big positive outlier. I would think the outlier's ranking is less valuable if old, more valuable if recent. I suspect that the 247 composite formula tends to overweight outliers.

February 10th, 2016 at 12:00 PM ^

I believe the 4 & 5 star players develop at a younger age than many of the 2 & 3 star players. In my case, I developed later, my penis length alone grew one full inch between high school and college.

February 10th, 2016 at 11:56 AM ^

For some odd reason the only thing that comes to mind: I should probably be working instead.

Seth obviously is. Great stuff man.

February 10th, 2016 at 12:05 PM ^

I'm intrigued by the higher than expected percent of available starts for the 2.75 stars. I think that speaks to the "sleepers" that Michigan evaluates and recruits that the recruiting sites aren't aware of. I'm thinking of players like Jeremy Clark, Willie Henry, et al.

I'm also curious about the dips at 3.4 and 4.4. Not sure if there's anything to take away from there, other than that there's athletes (Vladimir Emilien for example) that get rated highly early, but don't get dropped when they fail to improve/perform.

February 10th, 2016 at 7:43 PM ^

Well look:

| Player | Pos | Class | Starts |
|---|---|---|---|
| Brady Pallante | DT | 2014 | 0 |
| Quintin Woods | SDE | 2006 | 0 |
| Renaldo Sagesse | DT | 2007 | 0 |
| John Ferrara | OG | 2006 | 6 |
| Rueben Riley | OT | 2002 | 28 |
| Marell Evans | WLB | 2007 | 1 |
| Mark Huyge | OT | 2007 | 29 |
| Ray Vinopal | FS | 2010 | 6 |
| Patrick Omameh | OG | 2008 | 42 |
| Marques Walton | DT | 2004 | 0 |

It's a really small sample size, and the contributors are three sleeper OL and a free safety who'd be redshirting if he was ever even recruited on literally any other Michigan secondary of our lifetimes.

Only Omameh among them had an NFL career. And he was a late bloomer whom Rodriguez plucked to run his system. I don't think we ever were happy with Riley, and Huyge was unkillable but never excellent.

What about the 4.4s? Small sample sizes again but there are plenty of disappointments in there. Also plenty of guys who haven't played the back ends of their careers. For example Kugler's a 0/26 to the starts/possible total, but then that's his RS freshman and sophomore years and he could earn a starting job for the next two. On the other hand: Pipkins, Justin Turner, Doug Dutch, Brett Gallimore, James McKinney... It might be bad luck, or it might be guys who were 5-stars due to early development who then slipped to some sites at the end when the senior film didn't look any better than the sophomore film.

As for the little dip at 3.4 I found the error: there are no starts listed for special teams players, and a lot of our scholarship kickers and punters were 3.4s.
