stat wonk

As some noticed on the twitters, I've begun putting together the stat boxes for this year's HTTV opponent previews. I figured I might as well share some of that data here in one place.

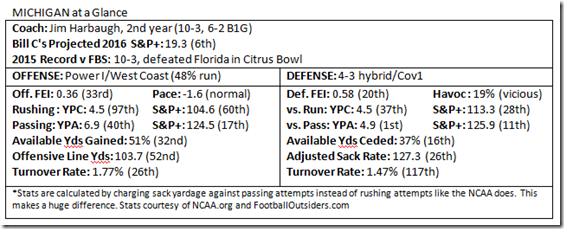

FEI and S&P+: champion stats from the two resident best internet football stats guys, Brian Fremeau (@bcfremeau) of Football Outsiders and Bill Connelly (@SBN_BillC) of Football Outsiders and Football Study Hall on SBNation.

Connelly is responsible for, among other stats, S&P+ ratings (for offense, defense, etc.), which are derived from play-by-play and drive data of every FBS game. S&P+ measures four of the five factors that determine game outcomes: efficiency, explosiveness, field position, and finishing drives. The fifth, turnovers, is relatively random, so it's left out except as extra weight on sack rates, a thing that will affect at least one weird number we'll see. Garbage time is removed, and everything is adjusted for opponent strength.

He also puts out preseason projections based on recruiting, returning production, and front-weighted S&P+ over the last five years. Michigan and its opponents, by Bill C's projected 2016 S&P+:

| School | S&P+ | Rk/128 | Recruiting | Ret Prod | 5-yr |
|---|---|---|---|---|---|
| Michigan | 19.3 | 6th | 14th | 5th | 17th |
| Ohio State | 16.4 | 14th | 5th | 18th | 3rd |
| Michigan State | 13.5 | 22nd | 18th | 30th | 12th |
| Penn State | 11.3 | 28th | 17th | 39th | 29th |
| Wisconsin | 8.3 | 37th | 33rd | 60th | 15th |
| Iowa | 8.1 | 38th | 49th | 32nd | 48th |
| Indiana | 3.9 | 56th | 55th | 57th | 75th |
| Maryland | 2.9 | 62nd | 47th | 65th | 77th |
| Illinois | 0.4 | 76th | 67th | 76th | 73rd |
| Colorado | -2.2 | 82nd | 50th | 87th | 101st |
| Rutgers | -3.1 | 87th | 60th | 93rd | 84th |
| Central Florida | -7.0 | 99th | 57th | 113th | 70th |
| Hawaii | -13.4 | 118th | 102nd | 116th | 120th |

Not a lot of play in that schedule; the big rivals look to remain tough tests, but that's it for the expected Top 25. The first two games should be good tune-ups for O'Korn/whoever.

Brian Fremeau made FEI and F/+, based on opponent-adjusted drive efficiency. Clock kills and garbage time are filtered out, and strength of schedule is factored in. Since FEI is an overall efficiency measure, I prefer to use it as a single-stat measure of an offense or defense. For an offense's or defense's run/pass splits, I lean on the play-by-play nature of S&P+, along with raw, sack-adjusted* yards per play.

Those and more after the jump.

* The NCAA treats sacks as rushing plays, which doesn't make sense, so every year I take the NCAA's base stats and reclassify sacks as pass plays. This makes a huge difference. I've put them in a Google Doc if you want at 'em.
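The sack adjustment is simple bookkeeping. A minimal sketch in Python, assuming NCAA-style totals where each sack is logged as a rushing attempt for negative yardage; the `SeasonTotals` fields and every number below are hypothetical, not pulled from the Google Doc:

```python
from dataclasses import dataclass


@dataclass
class SeasonTotals:
    """Hypothetical NCAA-style team totals (sacks counted as rushes)."""
    rush_att: int   # rushing attempts, sacks included
    rush_yds: int   # rushing yards, sack losses already subtracted
    pass_att: int   # pass attempts, sacks excluded
    pass_yds: int   # passing yards
    sacks: int      # times sacked
    sack_yds: int   # yards lost on sacks, as a positive number


def sack_adjusted(t: SeasonTotals) -> dict[str, float]:
    """Move sacks (and their lost yardage) from the run column to the pass column."""
    rush_att = t.rush_att - t.sacks
    rush_yds = t.rush_yds + t.sack_yds   # give back the yards the sacks took away
    pass_att = t.pass_att + t.sacks      # dropbacks rather than attempts
    pass_yds = t.pass_yds - t.sack_yds
    return {
        "raw_ypc": t.rush_yds / t.rush_att,
        "adj_ypc": rush_yds / rush_att,
        "raw_pass_ypp": t.pass_yds / t.pass_att,
        "adj_pass_ypp": pass_yds / pass_att,
    }


# Invented offense: 500 "rushes" for 2,000 yards, including 30 sacks for -200.
team = SeasonTotals(rush_att=500, rush_yds=2000, pass_att=400, pass_yds=3000,
                    sacks=30, sack_yds=200)
splits = sack_adjusted(team)
for key, value in splits.items():
    print(f"{key}: {value:.2f}")
```

In this invented case the run game gains almost 0.7 yards per carry and the pass game loses about a yard per play, which is the kind of swing that makes the adjustment worth doing.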

GRIII: "I see what you did there." Sobocop: "I THOUGHT THIS GUY WAS JUST A SHOOTER"

(Bryan Fuller)

One shooting metric to rule them all.

Hi Brian,

I was reading through your post from today about the game last night (solid effort, can't wait for Saturday!) and came across the part where you summarized Trey's statline, part of which was that he had 18 points on 11 shots. Is there a place that tracks "points per shot" (KenPom maybe?), and do you think this is a worthwhile metric for tracking the offensive efficiency of an individual player? I know the tempo-free stats usually look at eFG% as a major indicator of offensive prowess, but I was wondering if points/shot would be something akin to this for an individual player.

Thanks for your thoughts!

I just use points per shot as a quick-and-dirty evaluation method when I'm putting together a post because it gets the job done when we're running sanity checks on opinions from our eyeballs. As an out-and-out metric it falls short since it doesn't put free throws in the divisor properly—going 0-2 at the line doesn't hurt you. If you're reaching for an actual stat you can do better.
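To make the free-throw blind spot concrete, here is a tiny sketch with two invented stat lines: same points, same field goal attempts, but one player also bricked two free throws. Points per shot can't tell them apart:

```python
def points_per_shot(points: int, fga: int) -> float:
    """Points divided by field goal attempts; free throw attempts never enter the divisor."""
    return points / fga


# Hypothetical lines: both players score 18 on 11 field goal attempts,
# but player B also went 0-for-2 at the line.
player_a = {"pts": 18, "fga": 11, "fta": 0}
player_b = {"pts": 18, "fga": 11, "fta": 2}

pps_a = points_per_shot(player_a["pts"], player_a["fga"])
pps_b = points_per_shot(player_b["pts"], player_b["fga"])
print(f"A: {pps_a:.2f} pts/shot, B: {pps_b:.2f} pts/shot")  # A: 1.64 pts/shot, B: 1.64 pts/shot
```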

For a catch-all stat that encapsulates how many points a player acquires per shot attempt, I like True Shooting Percentage, which rolls FTAs into eFG% and spits out a number that's easy to interpret. Trey Burke is at 59%, which means that he is scoring at a rate equal to a hypothetical player who takes nothing but two-pointers and hits 59% of them. Easy.
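For reference, here is the usual TS% formula sketched in Python. The 0.44 multiplier is the commonly used estimate (the one Basketball-Reference uses) of how many free throw attempts make up one shooting possession; treat the exact constant as a convention rather than a law. The check at the bottom confirms the "hypothetical two-point shooter" reading of the number:

```python
def true_shooting(points: float, fga: float, fta: float) -> float:
    """TS% = PTS / (2 * (FGA + 0.44 * FTA)).

    0.44 is the conventional estimate of the share of free throw attempts
    that use up a possession (and-ones and three-shot fouls break the 2:1 ratio).
    """
    return points / (2 * (fga + 0.44 * fta))


# A player who only takes two-pointers and makes 59 of 100
# scores 118 points, and his TS% comes out to exactly 59%.
pure_two_point_shooter = true_shooting(points=59 * 2, fga=100, fta=0)
print(f"{pure_two_point_shooter:.0%}")  # 59%
```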

For Michigan, there's little difference between eFG% and TS%—Burke is 175th in one, 189th in the other, etc—because they so rarely get to the line. Teams at the other end of that scale can see players with much larger differences. Iowa demonstrates this amply. Roy Devyn Marble's eFG% is 46% and his TS% is 53%—a major difference. FTA-generating machine Aaron White is around 200th in eFG% and around 100th in TS%. From an individual perspective, the latter is a more accurate picture of what happens when Aaron White tries to score.
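This explains why the two stats diverge for FTA machines like Aaron White. With no free throws, TS% collapses to exactly eFG%, and every trip to the line pushes the two apart. A sketch with two invented players who shoot identically from the floor:

```python
def effective_fg(fgm: int, threes_made: int, fga: int) -> float:
    """eFG% = (FGM + 0.5 * 3PM) / FGA; free throws are ignored."""
    return (fgm + 0.5 * threes_made) / fga


def true_shooting(points: int, fga: int, fta: int) -> float:
    """TS% = PTS / (2 * (FGA + 0.44 * FTA))."""
    return points / (2 * (fga + 0.44 * fta))


def total_points(fgm: int, threes_made: int, ftm: int) -> int:
    """Two per made field goal, plus one extra per made three, plus free throws."""
    return 2 * fgm + threes_made + ftm


# Hypothetical players: both 5-of-12 from the floor with one three.
players = {
    "jump shooter": dict(fgm=5, threes_made=1, fga=12, ftm=0, fta=0),
    "foul magnet": dict(fgm=5, threes_made=1, fga=12, ftm=8, fta=10),
}

results = {}
for name, s in players.items():
    efg = effective_fg(s["fgm"], s["threes_made"], s["fga"])
    ts = true_shooting(total_points(s["fgm"], s["threes_made"], s["ftm"]), s["fga"], s["fta"])
    results[name] = (efg, ts)
    print(f"{name}: eFG% {efg:.1%}, TS% {ts:.1%}")
```

The jump shooter lands at 45.8% on both; the foul magnet stays at 45.8% eFG% but climbs to 57.9% TS%.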

The four factors everyone uses separate free throws from eFG%, so when you look at those as a unit you do see the impact of FTs. If you wanted to, you could cram eFG% and free throw rate into a single TS% factor and the other two (turnovers and offensive rebounding) into a Possession Advantage factor, but looking at four bar graphs seems to be okay for people.

Announcer meme overuse.

The announcers constantly having to tell us that Stauskas is more than just a shooter reminds me of last year's overused statement (story?) that Trey Burke played with Sullinger in HS. Seriously, they told us that every freaking game. So my question is, which one is worse?

I'm going to have to go with Burke. First, that was mentioned every game, whereas the Stauskas thing only gets mentioned in games where he has a take to the hole, which only happens MOST games. Second, at least the Stauskas thing is mentioned in context, as in, he just proved he was more than just a shooter which prompted the comment. The Burke/Sullinger mention was almost exclusively brought up out of the blue, and had nothing to do with anything happening in the game. It was as if the announcing team made note to make sure they mentioned it at a certain minute marker in the game because nothing plausibly could have brought it to mind otherwise.

Thoughts?

P.S. If it had kept going, Dan Dakich's mention of that thing about Spike's dad would easily have been the worst. Luckily, he only told us that Spike's dad was the former best biddy basketball player in the world during Michigan's first four games.

These are different classes of announcing crutch. The Burke thing—which is still happening—is the equivalent of Tom Zbikowski Is A Boxer, a biographical detail that will be crammed in every game to hook casual viewers. The Stauskas thing is a generally applicable sentiment that can be applied to anyone who takes a lot of threes but has decided to venture within the line.

Neither really bothers me. "Not just a shooter" means Stauskas has just thrown something down or looped in for a layup, and I am probably typing something about blouses or pancakes into twitter. I have good feelings associated with its utterance. The Burke thing is just background noise.

Divide!

Hey Brian,

So, no one is more sick of conference expansion talk than I am. I'm 100% with you that it's bent our tradition over a dumpster, and I agree it's foolish to base major long-term decisions on a dying profit model.

Here's the thing, though: does the fact that the current profit model is dying really matter? I mean, we're moving (slowly) to a system where you pay only for the channels you want instead of being extorted for a bunch of channels you'd never watch. So under this new business model, although there may be less overall money than under the old system, wouldn't they still get more subscribers to the B1G Network if they add more schools? There's not a single UNC fan who would pay $5 a month or whatever for the B1G Network, but if UNC were added, you'd get more subscribers than you would otherwise. I mean, there's the chance that you weaken the brand so much that you lose more subscribers than you gain, but I don't think that's a serious concern.

TL;DR: It's about the money, and won't expansion bring more regardless of whether the old model is dying or not?

Thanks again,

Nick

Expansion brings more money but it also brings more mouths to feed. From the perspective of a school in the league it only makes sense to add a team that is at least on par with you in terms of being able to bring fans and eyeballs. Penn State and Nebraska brought those numbers; Rutgers and Maryland likely do not.

The Big Ten can expand to acquire more subscribers, but in a world where cable is a niche product for watching live sports, the amount of money you get is proportional to the number of fans shelling out. Right now it's proportional to population, which makes Rutgers seem like a good idea. Later, maybe not so much.
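The "more mouths to feed" point is just averaging. A minimal sketch, assuming equal revenue sharing and a hypothetical 12-school league where each member generates 20 units of TV money; the newcomer's value under the two revenue models is invented purely to show the flip:

```python
def per_school_share(revenues: list[float]) -> float:
    """Equal revenue sharing: the league pot divided evenly among members."""
    return sum(revenues) / len(revenues)


def is_accretive(members: list[float], newcomer: float) -> bool:
    """A new school raises everyone's cut only if it brings in more than the current average."""
    return per_school_share(members + [newcomer]) > per_school_share(members)


# Hypothetical league: 12 schools each generating 20 units of TV money.
league = [20.0] * 12

# The same hypothetical newcomer valued two ways (numbers invented):
population_model = 25.0   # carriage fees scale with the market's population
subscriber_model = 8.0    # only fans who choose to pay count

print(is_accretive(league, population_model))  # True
print(is_accretive(league, subscriber_model))  # False
```

Same school, same league: whether expansion pays depends entirely on which revenue model you believe in.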

People think things that make them feel better.

To Brian:

Brian, I have this constant argument with a Spartan at work...He says that Michigan's recruiting rankings are always high because when Michigan lands a recruit, the recruit gets a bump in ranking. According to him, this is because a large number of Michigan fans pay recruiting sites for memberships so the sites keep Michigan fans happy by giving them a higher ranking than other schools with lower memberships. He also says that MSU's coaches are just better at recruiting than the sites so that is why they do better than their rankings. Any thoughts on how to prove / disprove his theory?

Thanks,

Troy

It will not matter since from the sounds of this conversation your co-worker thinks Mike Valenti is a gentleman scholar and will find some other way to wheedle himself positive feelings until such time as his team is crushed under the boot of history.

HOWEVA, you could just point out that literally every four-star member of Michigan's recruiting class fell in the most recent Rivals update except Jourdan Lewis, who hopped up sixteen spots. This is pretty much inevitable: unless you're moving up, you're moving down as more and more players are discovered. This dude will wave his face around in a disturbing fashion and ignore this data.
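The "unless you're moving up, you're moving down" point is simply how a ranked list behaves when new names get added. A minimal sketch with made-up players and ratings; this is not how Rivals actually computes anything:

```python
def rank_of(player: str, ratings: dict[str, float]) -> int:
    """1-based rank, best rating first; ties broken alphabetically so the result is deterministic."""
    ordered = sorted(ratings, key=lambda name: (-ratings[name], name))
    return ordered.index(player) + 1


# Hypothetical ratings before an update; "recruit" is our guy.
before = {"A": 5.9, "B": 5.8, "recruit": 5.7, "C": 5.6}

# Next update: the recruit's rating is unchanged, but two newly discovered
# prospects ("D", "E") are slotted in above him.
after = {**before, "D": 5.75, "E": 5.8}

print(rank_of("recruit", before), rank_of("recruit", after))  # 3 5
```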

As for the thing about MSU's coaches, yeah, recruiting ratings are not infallible and there will be teams that deviate above and below when touted guys bust and low-rated ones break out. MSU's gotten massive outperformance from its defense recently, and maybe they can sustain that in the same way Wisconsin can sustain its running game.

They'll be trudging uphill when it comes to Michigan and Ohio State. State fans love to point out Michigan's class rankings versus their performance over the last half-decade and say "see, nothing there." Taken over larger samples, though, recruiting does correlate with success. Michigan's fade was largely a lack of retention and coaching ranging from lackadaisical to awful. If MSU fans are counting on those two items to sustain them going forward they're in for a rude surprise.

The last straw for Run of Play proprietor, Slate contributor, and Dirty Tackle blogger Brian Phillips was two articles on consecutive days citing Franklin Foer's assertion that dictatorships led to good soccer. Many of the nations that have been super good at soccer over the years have been run by dictators, if you lump Vichy France in with them and think Hitler and Mussolini have anything to do with anything in the 21st century. The first problem with this piece of intellectual noodling is that the percentage of teams that have won the World Cup during or after a period of dictatorship (86%) is almost equal to the percentage of countries that have undergone periods of dictatorship since 1930: twenty-five of the 32 teams in this year's edition have done so, 78%.
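How unremarkable is 86% when the base rate is 78%? A rough check in Python, under loudly stated assumptions: that the 86% corresponds to six of the seven nations that had won the World Cup at the time, and that winners are independent draws from a pool where 25 of 32 countries have had a dictatorship. Both are simplifications for illustration, not Phillips's method:

```python
from math import comb


def prob_at_least(k: int, n: int, p: float) -> float:
    """P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


base_rate = 25 / 32                       # share of this year's teams with a dictatorship since 1930
chance = prob_at_least(6, 7, base_rate)   # assumed: at least 6 of 7 past winners
print(f"{chance:.0%}")  # 53%
```

If dictatorship had nothing to do with anything, you would still see this pattern about half the time.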

The second is that the statement means nothing. Phillips on the Kuper/Szymanski book Soccernomics, which endeavors to be a Freakonomics for the beautiful game:

You want to say that money is the secret behind soccer success, so you break down international games by GDP and find that, yeah, it matches up fairly well. But it doesn’t work as a theory, because China is terrible at soccer and the US is only okay at it. So you invent a variable called “tradition” and add it into the formula, which helps (now Brazil’s looking really strong), but you’re still left struggling to explain why, say, England doesn’t do better. So you add in population size, and on and on and on. Eventually, you have a delicately balanced curl of math that correctly reproduces the results of most recent matches (even if it accidentally predicts that Serbia will reach the current World Cup final). So you go to a publisher, but no one wants to buy a book about how GDP is covariable with national-team success 40% of the time, or whatever; they want a book that claims to have Uncovered the Secrets of Soccer© Using Funky Mathematical Techniques™. And so you’re led into making grand claims for the predictive power of research that really only demonstrates correlation. And there’s enough data swirling around a complex event like the World Cup that you could get the same results by collating fishing exports, number of historic churches, and percentage of authors whose names include a tilde.

You have no mechanism. Your correlation is extraordinarily weak. You have just wasted everyone's time.

The very same day, Slate (et tu!) published an article by a guy who studies a particular brain parasite claiming a correlation between soccer performance and infection rates of Toxoplasma gondii, a single-celled parasite whose raison d'être is to get into a cat's gut so it can make babies. An R-squared was not mentioned, but it was gestured at. Regression rules everything around me. This is why most published research results are false.

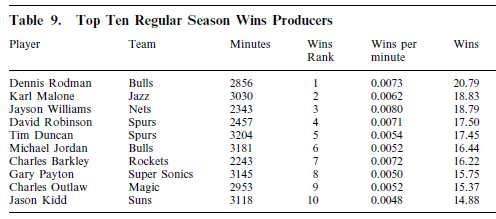

Soccer is not the only sport suffering from pseudoscience obsessed with elevating correlation above all else, mechanism be damned, and from elegant curls of math that prove little other than the academic's talent for obfuscation in the name of publishing. Kuper and Szymanski actually got to the party late. Princeton economist and Malcolm Gladwell fave-rave David Berri's been here for years, and he's packing the platonic ideal of delicately balanced curls of math that end up ludicrous on further inspection. Behold the best (and sixth-best) players of the 1999 NBA season:

the emperor's clothes are fine indeed.

Berri made a splash in the sports world when he released a transparently silly book that purported to show that Dennis Rodman was responsible for more wins than teammate Michael Jordan. This drew the ire of the basketball statistics community and anyone with a damn lick of sense. People set about showing that Berri was peddling snake-oil. I even had a go at it in one of the erratic Pistons posts that showed up around here a couple years ago, noting that after Ben Wallace left the Pistons' rebounding changed not one percent on either end of the floor. Ben Wallace got his rebounds from his teammates. (It turned out that Wallace's major skill was an ability to keep opponents off the free throw line.)

This did not take, unfortunately, and Berri has been permitted to say silly things about all sports that apparently intelligent people take seriously because he has "Princeton" next to his name. He moved on from basketball to "show" that NFL teams don't care how well their quarterbacks perform, only how high they're drafted…

Aggregate performance and draft position are statistically related. But as Rob and I argue, this is because in the NFL (like we see in the NBA) draft position is linked to playing time. And this link is independent of performance.

…that NHL goalies are indistinguishable from each other…

... there simply is little difference in the performance of most NHL goalies.

…and has returned to basketball to state that coaches don't understand who their best players are:

"... the allocation of minutes suggests the age profile in basketball is not well understood by NBA coaches."

Berri's at least had the common sense to stay away from baseball, where a horde of men with razor-sharp protractors wait for him to make a false move. (We will see later that collaborator JC Bradbury has not.) The statistical communities in football, basketball, and hockey are considerably more unsure of what the hell is going on in their chosen sport and are thus vulnerable to suggestion from an economist, even if it's one who seems to have never watched a sport of any variety.

The problem with all of Berri's outlandish theories is that they are wrong. Not because of old guys who peer into the soul of Andre Ethier and see a ballplayer, but because of other, more careful numbers from people who are looking for things that are true instead of things that are impressive to Malcolm Gladwell.

Quarterbacks

Berri's study actually shows that amongst quarterbacks who play a lot, draft position is not a strong factor in their performance. This is his magnificent leap:

For us to study the link between draft position and performance, we can only consider players who actually performed. It’s possible that those quarterbacks who never performed were really bad quarterbacks. But since they never played, we don’t know that (and Pinker also doesn’t know this).

Low draft picks who don't play only find the bench because of bias. A coach's decision to start one player over the other is a worthless signal. Coaches are dumb.

In reality, backup quarterbacks perform measurably worse than starters, and many different metrics show a strong correlation between draft position and performance.

Goalies

When you restrict your regressions to the top 20 goalies in terms of minutes, about half of the variation in save percentage appears repeatable. A standard deviation of talent is worth around ten goals. These days, a unit of five skaters who finished +50 at the end of the season would be heroes on the league's best team. Berri's undisclosed approach to the data set apparently takes goalies with far fewer than starter's minutes. A quick correlation run by Phil Birnbaum shows radically different r-squared values than those Berri finds just by upping the sample size. Maybe Birnbaum's numbers aren't dead-on—he doesn't use even strength save percentage, for instance—but he's not the one claiming a massive inefficiency. He's just showing that throwing a small r-squared out doesn't actually mean anything:

I don't know how the authors got .06 when my analysis shows .14 ... maybe their cutoff was lower than 1,000 minutes. Maybe there's some selection bias in my sample of top goalies only. Maybe my four seasons just happened to be not quite representative. Regardless, the fact that the r-squared varies so much with your selection criterion shows that you can't take it at face value without doing a bit of work to interpret it.

Age in the NBA

In the NBA, 23- and 24-year-old players net more minutes than any other age bracket, and while the average age of an NBA minute is 26.6 this year, there's a blindingly obvious explanation for this:

Berri and Schmidt think that NBA minutes peak later than 24 because coaches don't understand how players age. It seems obvious that there's a more plausible explanation -- that it's because players like Shaquille O'Neal are able to play NBA basketball at age 37, but not at age 9.
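The Shaq point is about skew: ages have a hard floor around 19 but a long tail into the late 30s, so the average age of a minute sits above the peak. A sketch with an invented distribution of minutes by age; the weights are made up to have that shape and are not real NBA data:

```python
# Hypothetical share of league minutes by age (arbitrary units, invented for illustration).
minutes_by_age = {
    19: 1, 20: 3, 21: 5, 22: 7, 23: 9, 24: 9, 25: 8, 26: 8, 27: 7, 28: 7,
    29: 6, 30: 5, 31: 4, 32: 3, 33: 3, 34: 2, 35: 1, 36: 1, 37: 1,
}

peak_age = max(minutes_by_age, key=minutes_by_age.get)  # first age with the most minutes
total = sum(minutes_by_age.values())
mean_age = sum(age * m for age, m in minutes_by_age.items()) / total

print(peak_age, round(mean_age, 1))  # 23 26.4
```

Minutes peak at 23 and 24, yet the average minute is played by a 26-year-old, with no coaching confusion required.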

In sum: wrong, wrong, wrong, and wrong.

So what's going on here?

When you've got a hammer, everything looks like a nail. Berri's hammer is regression analysis, and he goes about hitting everything he can find with it until he finds something that seems vaguely nail-like from a certain angle. Then he proclaims a group of extremely well-paid subject matter experts dumb. When challenged about this, he says things like "regressions are nice, but not always understood by everyone." He calls the protestors dumb.

This is more than a logical fallacy: it's a worldview. In a post on a cricket study by another set of authors, Birnbaum points out the assumption built into a lot of economics studies. It, like most of Berri's work, runs a regression on some data and reports back that something fails to be statistically significant:

The authors chose the null hypothesis that the managers' adjustment of HFA [home field advantage] is zero. They then fail to reject the hypothesis.

But, what if they chose a contradictory null hypothesis -- that managers' HFA *irrationality* was zero? That is, what if the null hypothesis was that managers fully understood what HFA meant and adjusted their expectations accordingly? The authors would have included a "managers are dumb" dummy variable. The equations would have still come up with 4% for a road player and 10% for a home player -- and it would turn out that the significance of the "managers are dumb" variable would not be significant. Two different and contradictory null hypotheses, both which would be rejected by the data. The authors chose to test one, but not the other.

Basically, the test the authors chose is not powerful enough to distinguish the two hypotheses (manager dumb, manager not dumb) with statistical significance.

But if you look at the actual equation, which shows that home players are twice as likely to be dropped than road players for equal levels of underperformance -- it certainly looks like "not dumb" is a lot more likely than "dumb".
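Birnbaum's power argument in miniature. A minimal sketch with invented numbers: suppose a model estimates that managers account for half of home-field advantage (0.5, where 0 means they ignore it entirely and 1 means they fully adjust) with a standard error of 0.4. A plain two-sided z-test then fails to reject either of the two contradictory null hypotheses. The estimate, the standard error, and the 0-to-1 framing are assumptions for illustration, not figures from the cricket study:

```python
from math import erfc, sqrt


def two_sided_p(estimate: float, null_value: float, std_error: float) -> float:
    """Two-sided p-value for a z-test of `estimate` against `null_value`."""
    z = (estimate - null_value) / std_error
    return erfc(abs(z) / sqrt(2))


estimate, se = 0.5, 0.4  # hypothetical: share of HFA that managers adjust for

p_dumb = two_sided_p(estimate, 0.0, se)      # H0: managers ignore HFA ("dumb")
p_not_dumb = two_sided_p(estimate, 1.0, se)  # H0: managers fully adjust ("not dumb")
print(f"{p_dumb:.2f} {p_not_dumb:.2f}")  # 0.21 0.21
```

Neither null is rejected at 0.05, so "fail to reject managers are dumb" is exactly as supported as "fail to reject managers are smart."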

The goalie example is the most illuminating here: by adjusting the parameters of your study you can arrive at radically different conclusions. I'm not sure if Berri is intentionally skewing his results to get shiny Moneyball answers, but given how dumb his justifications are for the NFL study, that's the kinder interpretation. Running around saying that we don't know that the average sixth-rounder isn't John Elway waiting to happen because they can't get on the field is obtuseness that almost has to be intentional. On the other hand, he does blithely state he's "not sure there is much to clarify" about his assertion that NFL general managers are on par with stock-picking monkeys when it comes to identifying quarterbacks, so he may genuinely be that clueless. (The Lions tried a stock-picking monkey. It didn't work out.)

There's often a kernel of truth in a Berri study. When the Oilers were casting about for a goalie, smart Oilers bloggers noted the glut of basically average goalies available, and they jumped off a cliff when the team signed a mediocre 36-year-old to a four-year, $15 million deal when it could have signed two guys for something around the league minimum and expected about the same performance. That's something close to the criticism Berri levels, with the volume turned way down. Hockey and football and basketball are not baseball. It is incredibly difficult to encapsulate performance in any of these sports in statistics. So when Berri proclaims that NHL goalies are basically the same based on plain old save percentage, which isn't even the best metric available, he ascribes more power to a stat than it deserves and simultaneously ignores a raging debate about one of the most difficult questions in sports statistics to get a handle on.

At the very least, the questions Berri attempts to tackle with really complicated regressions are murky things best approached with a dose of humility. Instead Berri and colleagues say there is "simply" no difference, that his research is "not understood by everyone," that a formula that declares Jeff Francoeur worth $12 million a year is justifiable even though any minor league player making the minimum could replace his production, that protestors are making "consistent basic errors in logic, economics and statistics," and that David Berri went to Princeton. If he bothers to respond to what's admittedly a pretty shrill criticism, he will undoubtedly state that if only I had managed to understand his papers, the many ludicrous conclusions easily disproved by competing studies (QBs, save percentage), simple facts that blow up the idea being presented (NBA minutes), or common sense (Rodman, Francoeur) would have come to me in an epiphany.

These things are all ridiculously complicated and it's obvious with every response to another Berri study that declares someone dumb that different views on the data produce different results. Berri's overarching thesis is that subject matter experts make huge errors because they refuse to look at data from all possible angles. Stuck in their ruts, they robotically bang out decisions like their forefathers. Statistician, heal thyself.

There may be some social utility in distracting economists from theorizing about the economy, but there's no utility in the domain they're actually tackling.

![image 3](https://mgoblog.com/sites/mgoblog.com/files/images/Mailbag_C353/8431733556_454df545ec_c1_thumb.jpg)
