Sagarin Ratings Rigged?

No, I don't believe Sagarin rigged his schedule ratings to help Oregon and prevent TCU from miraculously slipping by Oregon. But it is interesting to note that while I have heard plenty of talk about TCU and Boise St. lacking schedule strength, I hadn't really heard much regarding Oregon's.

Step in unnamed MGoBlogger* (**edit** named Drakeep) who pointed out that the Big Ten teams' schedules included an average of 7 winning opponents (while each SEC team faced an average of 5.8, and the PAC-10 something like 4...) This savvy blogger also pointed out that Oregon had only faced 3 teams with a winning record. I could barely believe it, and checked the stats myself. Such is true.

So I head over to Sagarin to see where exactly a schedule against 3 winning teams and a very much non-winning FCS school would rank. 20th. What was U of M's against 7 winning teams and a winning FCS school? 40th. Hmmm....

Next, I give Sagarin the benefit of the doubt and assume that although Oregon's opponents didn't all win a lot of games, the games they did win must have been meaningful. (In other words, Oregon's opponents must have combined to beat a lot of winning teams... as beating crappy teams and losing to good ones should not build a team's own strength.)

Oregon - Played 3 teams with winning records (out of 11, plus one losing FCS team.) The 12 teams Oregon played combined to achieve 12 victories over "winning FBS opponents" and 7 victories over "winning FCS opponents." That equates to Oregon's opponents each beating ONE winning FBS team.

Michigan - Played 7 teams with winning records (out of 11, plus one winning FCS team.) The 12 teams Michigan played combined to achieve 32 victories over "winning FBS opponents" and 7 victories over "winning FCS opponents." That equates to Michigan's opponents each beating 2.67 winning FBS teams.

These statistics are not even close, on either the primary or secondary level. Yet, there it is: Oregon's SOS at 20 and Michigan's SOS at 40.

For another reference point: Mich St. played 5 teams with a winning record, and MSU's opponents combined to haul in 19 wins against "winning FBS opponents." They lie between Michigan and Oregon on both the primary and secondary levels, and have a SOS rated 65th.

In conclusion, based on the ranking of Michigan and MSU schedules, Oregon's schedule should probably rate somewhere between 70 and 80. This has placed me in the odd position of questioning the legitimacy of Sagarin's rankings... if any mathematician out there can point out how strength of schedule might use something more meaningful and direct than opponent's wins and opponents' wins against winning teams to rank schedules, let me know. Until then, I'm going to have to believe that Sagarin is off his rocker.

*Unnamed MGoBlogger - my apologies, but I went in search of your forum and could no longer find it. If you (or anyone else) would care to link to your post, I will gladly edit the above content to include your name and a link.

December 7th, 2010 at 7:54 PM ^

It is rather embarrassing how quickly you found that... I know, I know... Google and search and all. Dammit technology, you know how to cut to the core of me!

December 7th, 2010 at 7:58 PM ^

I think you're correct. At least when you look at opponents straight up, Michigan's schedule definitely appears to be tougher, just based on wins and losses. This is especially true when you consider Michigan played three 11-1 teams to Oregon's one. Michigan's opponents were 79-53 (not including 6-5 UMass) and Oregon's opponents were 61-72 (not including 2-9 Portland State). Michigan played 3 teams currently in the top 10, Oregon played 1 (can't blame them for BEING one).

You can consider Stanford vs. any of Wisco/osu/msu a push, but Michigan's schedule appears otherwise significantly tougher than Oregon's.

I, too, would be interested to hear math geeks postulate as to why Oregon's schedule appears so much tougher to computers like Sagarin.

December 8th, 2010 at 12:20 AM ^

But is it tougher than TCU's?

December 7th, 2010 at 8:04 PM ^

December 7th, 2010 at 8:09 PM ^

That's 5 guaranteed losses for the conference instead of 5 wins. That could have made the difference with the two teams that went 6-6 in the Pac10 and with the two that went 5-7. If all four of them got a cupcake instead of another in-conference game, that's a significant boost if all you're looking at is winners or losers.

Instead, that's all some people look at and we get threads and diaries like this.

December 7th, 2010 at 8:17 PM ^

Right, because Washington St. isn't a cupcake...

December 7th, 2010 at 9:51 PM ^

Round robin means that only one team wouldn't play Wazzou if it went to the 8 teams as every one else does. That doesn't wipe out the other four losses.

And to further my argument, let's compare the Pac10 to a conference we're more familiar with. The Big Ten isn't quite on the same plane as the Pac10, but I'd bet the Pac10 would compare similarly, if not more closely, with the SEC or otherwise.

Of the non-conference schedule, the Pac10 teams went 21-10. Of those ten losses, 4 came against teams in the top 10 of the final BCS standings, another came against a team in the top 15.

Four losses came against teams not in a BCS AQ conference (five if you include ND); four of those teams finished in the BCS top 15. The Big Ten played exactly 2 OOC teams that were ranked in the final BCS top 20. They lost to both.

Eight of the Pac10's wins came against BCS AQ conference teams, nine if you include Notre Dame. The Big Ten, they only had 7 if you include ND, 5 if you don't. The Pac10 faced 14 BCS AQ opponents OOC (ND 2x) for an average of 1.40 BCS AQ OOC opponents per team. The Big Ten faced 12 (ND 3x) for an average of 1.09 per team.

Including the 45 conference games (90 participants), the Pac10 participated in 103 games against BCS AQ conference teams. The Big Ten had 100 despite having an extra team to build up more chances.

The Pac10 played just 6 FCS schools, going undefeated. The Big Ten played 10 FCS schools going 9-1.

December 7th, 2010 at 10:14 PM ^

Minnesota is an AQ school. They also suck. That's who lost to an FCS school in the Big 10. You want to penalize the whole conference because Minny is not good, go for it. I don't think that makes sense, but have at it.

As for this "AQ school" non-sense, Wake Forest is an AQ school; they suck. Vanderbilt is an AQ school; they, too, suck. Kansas, Duke, Washington St; suck, suck, and hella-suck.

The Pac10 is living off of Oregon and Stanford. USC and Arizona are solid teams (Iowa level); I'll toss ORST in too since their schedule is legitimately difficult. The rest of the conference is what it is: limp.

This whole discussion takes a few generalities and spins them into, "I be like dang, the PAC10 deserves credit for playing AQ schools." Sorry, man. Go ahead and believe it if you want but I don't; Sagarin be damned.

December 7th, 2010 at 10:40 PM ^

Obviously, there's a lot of subjectivity involved (in terms of which conference is stronger), because many of these teams don't actually play each other.

I think Minnesota is actually a good example. I don't really know Sagarin, but I suspect that the Big 10 does get penalized for Minnesota losing to an FCS team - at least for all the Big 10 teams that played Minnesota. It's similar to how people were discounting Boise State's victory over Virginia Tech, because Virginia Tech subsequently lost to James Madison.

December 7th, 2010 at 10:37 PM ^

I had already seen that they lost to most of their opponents who had winning records, but wasn't aware of what the breakdown of their wins were.

What do you think about looking at the "teams that matter," i.e. teams who are above average?

A quick count reveals that 58 teams posted winning records in FBS this year; and those winning teams accumulated a combined 202 losses. U of M's opponent count of 32 wins against winning teams means U of M's opponents (who account for 8.3% of all FBS teams) handed out 15.8% of all winning FBS teams' losses. Meanwhile, Oregon's opponents (who also account for 8.3% of all FBS teams) handed out only 5.9% of all winning teams' losses. Seems pretty straightforward to me that Oregon's schedule is not vastly more difficult than U of M's.
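For anyone who wants to check those percentages, here is a quick sketch in Python. The inputs are just the counts quoted above (I have not re-counted them), and the function and variable names are made up for illustration.

```python
# Counts quoted in the comment above; taken as given, not re-verified.
TOTAL_LOSSES_BY_WINNING_TEAMS = 202   # combined losses of the 58 winning FBS teams
MICHIGAN_OPP_WINS = 32                # wins by Michigan's opponents over winning FBS teams
OREGON_OPP_WINS = 12                  # wins by Oregon's opponents over winning FBS teams


def share_of_losses(wins, total_losses):
    """Percentage of all winning teams' losses handed out by one schedule's opponents."""
    return 100.0 * wins / total_losses


michigan_share = share_of_losses(MICHIGAN_OPP_WINS, TOTAL_LOSSES_BY_WINNING_TEAMS)
oregon_share = share_of_losses(OREGON_OPP_WINS, TOTAL_LOSSES_BY_WINNING_TEAMS)

print(f"Michigan's opponents: {michigan_share:.1f}% of winning teams' losses")
print(f"Oregon's opponents:   {oregon_share:.1f}% of winning teams' losses")
```

The two shares come out to the 15.8% and 5.9% figures used in the argument.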

My apologies, I really didn't mean for this to turn into some sort of PAC-10 vs BIG-10 dick sizing contest. I am only pointing out that Oregon's opponents neither posted winning records for themselves, nor beat many teams of significance.

December 7th, 2010 at 8:22 PM ^

but that still wouldn't make up for the discrepancy in Oregon's opponents beating meaningful teams (even if all intra-conference wins were replaced with wins over winning cupcakes, Oregon's schedule would still only have brought in 20 wins against winning teams vs. Michigan's schedule bringing in 32.... now you tell me the likelihood of all 8 opponents scheduling and beating a winning team.)

December 7th, 2010 at 9:12 PM ^

Not really mathematical, but off the top of my head:

1. Did you account for the fact that all of Oregon's opponents lost to Oregon? I think I remember seeing something like this when looking at stats in Madden, where winning teams have opponents with worse win-loss records, because those opponents lost to said winning team. Losing teams have opponents with better win-loss records, because those opponents beat said losing team.

2. Since each Pac 10 team apparently plays every other team (?), that's 10 teams with 9 conference games each. 45 total conference games, 45 wins, 45 losses for the conference, and only 30 non-conference games to compensate. The Big 10 has 11 teams but only plays 8 conference games. 44 conference games (?), 44 wins, 44 losses, and 44 non-conference games to compensate.

3. Combining 1&2 suggests that the impact of having a 12-0 Oregon and 11-1 Stanford in the Pac 10 will mathematically result in the rest of the conference having much worse win-loss records than having three 11-1 teams in the Big 10. Again, this is all back-of-the-envelope stuff, so I could be totally wrong.
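The game counts in point 2 can be sanity-checked with a few lines of Python. This is only a sketch: it assumes a 12-game regular season and that every non-conference opponent comes from outside the conference, and the helper names are hypothetical.

```python
SEASON_GAMES = 12  # assumed regular-season length for every team


def conference_games(teams, conf_games_per_team):
    """Total conference games; each game is shared by two conference teams."""
    return teams * conf_games_per_team // 2


def nonconference_games(teams, conf_games_per_team, season_games=SEASON_GAMES):
    """Total non-conference games played by the conference's teams."""
    return teams * (season_games - conf_games_per_team)


# (conference games, non-conference games)
pac10 = (conference_games(10, 9), nonconference_games(10, 9))
big_ten = (conference_games(11, 8), nonconference_games(11, 8))

# Every conference game hands the conference exactly one win and one loss,
# so only the non-conference games can move the combined record off .500.
print("Pac-10 :", pac10)
print("Big Ten:", big_ten)
```

So the question marks above check out: 45 conference games against 30 non-conference games for the Pac-10, and 44 against 44 for the Big Ten.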

December 7th, 2010 at 10:01 PM ^

1. Bump a loss for all of Oregon's opponents: Tennessee has a winning record outside of Oregon, and so do Washington and Ariz St (sort of; ASU pulled the no-no of playing two FCS teams... make of it what you will.) That gives Oregon 6 winning teams on its schedule. Give M's opponents their losses back and the Illini become a winning team. It is now 6 winning teams on the schedule versus 8 for Michigan (discounting UMass.) Also, the secondary trait of all of those opponents will change, but not enough to close the gap of 12 wins against meaningful teams vs 32 wins against meaningful teams.

2. Yes, the PAC-10 hands itself losses. But, there are only 4 teams in the Pac-10 with winning records; that is because they lost to a lot of out of conference foes... including nearly every winning-foe they played.

December 7th, 2010 at 10:12 PM ^

that is because they lost to a lot of out of conference foes... including nearly every winning-foe they played.

Record was 6-10 against teams >=.500 in the OOC. Losses:

#5 Wisconsin 11-1

#14 Oklahoma State 10-2

Southern Methodist 8-4

Kansas State 7-5

Notre Dame 7-5

BYU 6-6

#18 Nebraska 10-3

#15 Nevada 12-1

#10 Boise State 11-1

#3 TCU 12-0

December 7th, 2010 at 10:17 PM ^

Oregon State gets credit for TCU and BSU, not the whole conference.

Also, losing to a good team doesn't mean that you yourself are good: see Michigan.

This is ridiculous.

December 7th, 2010 at 10:26 PM ^

I don't believe Sagarin rigged his schedule ratings

The discussion is strength of schedule and the Pac10's inflation. That's how I've read it. This isn't about how good the conference is, this is about schedule strength.

I'm not going to argue that some of the middle of the Pac10 teams are good to great because of playing a tough schedule. That's stupid, and I think we can agree on that. What we're saying is that their schedules are peppered with several good teams, and that helps their SOS.

December 7th, 2010 at 10:32 PM ^

My bad. I think others (not necessarily in this thread) take Sagarin's SOS rating and run with it as a piece of evidence for the Pac10's strength. They must be engaged regarding this dastardly opinion; 'tis my mission on the internet.

We're cool, FA. Rest your pretty little head, we're cool.

December 7th, 2010 at 10:22 PM ^

They lost to 62.5% of the winning OOC foes they played.

December 7th, 2010 at 8:38 PM ^

No, it's not rigged. Sagarin uses a formula to make his rankings, and I am willing to bet there isn't some caveat in the formula that ranks TCU lower because "he" doesn't want TCU in the big game. The mistake I believe you are making is valuing all wins as being equal, and those who their opponents play as being equal as well.

Oregon played the 3rd, 22nd, 23rd, 24th, 31st, 34th, 42nd, 59th and 82nd ranked teams. In the Pac10 every team plays the other. So for each one of those teams listed, you replace their own rank with the #1 team in the country. That increases their SOS and their ranking every week it's updated. It's cyclical, +/- whatever the OOC schedule does to the overall calculation.

On top of that, away games are worth more SOS-wise than home games. Also, I believe* there is an adjustment made to each win towards the team's SOS; otherwise the winning team would actually be penalized in the rankings for the win because their opponent lost.

So no, it's not rigged; it's the result of the Pac10 schedule and having 2 of the top 3 teams in the country playing in the same conference.

*It also may be ignored because it's negligible in the overall calculation; I don't know for certain.

December 7th, 2010 at 9:46 PM ^

Ranked 3rd, 22nd, 23rd, 24th, 31st, 34th, 42nd, 59th and 82nd according to whom? :)

I'm pretty sure using the end-result of a system is not the appropriate way to validate its components.

Secondly, I am not valuing all wins as equal. Rather, I have only investigated the number of wins against opponents who are significant: those with winning records, which means by nature I am valuing "wins" as being quite differentiable. If Tennessee beat teams who went 4-8, 1-11, 4-8, 2-10, and 6-6 (none of whom proved themselves as above average teams after playing a composite 60 games, of which 40+ are unique... or approx. 1/3 of all FBS teams) and didn't beat any teams with a winning record (other than an FCS school which went 6-5... in FCS) then I can't say that Tennessee is a relevant win. All winning teams who played Tennessee beat them, therefore Tennessee is not a strong win upon which to claim separation. Doing that for all opponents on a team's schedule allows an analysis of how many strong wins were accumulated across a large portion of teams played by the team's opponents. Granted, something like home/away could add to the strength of a win (or lessen the cost of a loss,) but even if all 12 of the big wins on Oregon's opponents' combined record were away games, I'm pretty sure that of 32 big wins on Michigan's opponents' combined record there are probably 12 away games.

A quick count reveals that 58 teams posted winning records in FBS this year; and those winning teams accumulated a combined 202 losses. U of M's opponent count of 32 wins against winning teams means U of M's opponents (who account for 8.3% of all FBS teams) handed out 15.8% of all winning FBS teams' losses. Meanwhile, Oregon's opponents (who also account for 8.3% of all FBS teams) handed out only 5.9% of all winning teams' losses.

There is a huge difference between U of M's opponents and Oregon's in terms of meaningful wins (which is a measure of success at 2 degrees of separation from Michigan.) There is also a difference at 1 degree of separation, which is to say that playing 7 winning teams is much different than playing 3 winning teams. I am positive that accounting for home/away wins will not negate that difference (unless it is weighted very, very heavily... which would be strange for a "3-pt advantage.")

December 8th, 2010 at 4:40 PM ^

Ranked 3rd, 22nd, 23rd, 24th, 31st, 34th, 42nd, 59th and 82nd according to whom? :)

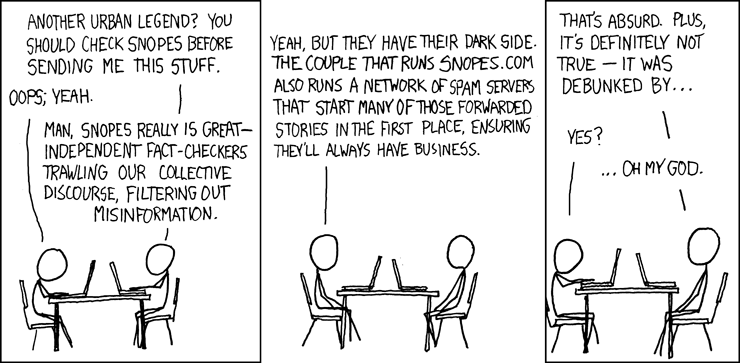

your initial sentence so reminds me of this:

December 8th, 2010 at 6:27 PM ^

You are right, you didn't value "all" teams the same, but differentiating only between bowl-eligible teams and the rest won't provide you with a very clear answer. Handing an 11-1 Stanford team its only loss of the year is worth a lot more in SOS than being the 6th team to beat UCLA, or whatever team. That's my opinion at least. His system makes sense; is it perfect? No, but it's the most reputable source.

December 7th, 2010 at 9:47 PM ^

If I remember correctly, when calculating a team's SOS, Sagarin does not consider any data points involving the team in question. That means that when calculating Oregon's SOS, Sagarin removes every bit of input involving Oregon. All losses to Oregon disappear, but the effect of having played the #1 team in the country disappears too.

Oregon's SOS is very high because they beat the Stanford team that would have been #1 overall if they hadn't played Oregon and because the Pac 10 did very well in non-conference play. It should be noted that what happens between other teams in-conference is somewhat negligible in Sagarin's ratings because the effect of say Arizona State beating Arizona is that the value of a win over Arizona State increases about the same amount that the value of beating Arizona decreases. The Pac 10, meanwhile, went 21-10 in non-conference play and went 10-5 against other BCS teams, which dramatically raised the value of every Pac 10 team in Sagarin's system.

Also, it should be noted that by removing margin of victory from consideration in the BCS, the Sagarin ratings do not function well as a statistical system in general. And as non-AQ teams are typically punished in the SOS department, they really need margin of victory to be considered if they are going to be better evaluated by the computers.

December 8th, 2010 at 6:19 PM ^

Your first point makes a lot of sense; it would do the same thing I was speculating about for the opponent losing.

Non-conference games weren't even something I was factoring in to begin with, since the majority of their opponents are in conference, but you are right that the conference did well.

The Elo-chess rating is what is sent to the BCS. His Predictor (which I don't think is actually his) runs off of margin of victory alone, I believe, and last time I read about it, it was his most accurate predictor of what is to come. The rankings are a combination of the 2 systems. I am not sure if SOS goes into any of the calculations or if it is just a by-product of the ranking system. Do you?

December 7th, 2010 at 8:40 PM ^

On top of playing a 9 game conference schedule, I suspect that the Pac 10 played the toughest non-conference schedule. Here's a listing of some of the quality opponents they played:

Wisconsin, Iowa, Boise St, TCU, Okl St, Tennessee, Nebraska, Nevada, Kansas St, Texas, and 2 teams played ND.

Obviously, they played their fair share of tomato cans too. By comparison, here's best competition the Big Ten played OOC:

Arizona St, Miami, Arizona, Missouri, Alabama, UConn, USC, 3 teams played ND.

Even with an extra out of conference game, and an extra team, the Big Ten still played fewer quality opponents than the Pac 10.

Not sure it's much of a feather in your cap to play a 7-5 Big Ten team vs a 6-6 Pac Ten team.

December 7th, 2010 at 9:04 PM ^

I'm not sure how losing to Wisconsin, Boise St., TCU, Okl St, Nebraska, Nevada, Kansas St, and going 1-1 with Notre Dame strengthens the conference. I agree it was tough for Oregon's opponents to play those teams, but they certainly did not win very many of those matchups (meaning Oregon's opponents did not gain strength...)

They gained strength by beating Iowa (who itself BEAT 3 winning teams,) gained no strength by beating Tennessee (who beat no team with a winning record, and is a terrible example of a "strong" team this year,) gained a little strength by beating a non-winning Texas (who at least beat 2 winning opponents,) and neither gained nor lost strength by beating Notre Dame (due to going 1-1 against the Irish.)

Simply playing tough games does not strengthen a conference for other conference members' SOS...

December 7th, 2010 at 9:11 PM ^

Don't the Sagarin Rankings and other SOS rankings factor in both opponents' records and opponents' opponents' records?

The RPI calculates SOS by taking 2/3 of the opponents' winning percentage and 1/3 of the opponents' opponents' winning percentage. So playing teams that play tough teams does actually help in many formulas.
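As a rough sketch of that 2/3 and 1/3 weighting (using only the weights stated above; the function name and the sample percentages are made up for illustration, and real RPI implementations may differ in details):

```python
def rpi_sos(opp_win_pct, opp_opp_win_pct):
    """Strength of schedule as 2/3 opponents' win pct + 1/3 opponents' opponents' win pct."""
    return (2.0 * opp_win_pct + opp_opp_win_pct) / 3.0


# Hypothetical inputs: two schedules whose opponents have identical .500 records,
# but whose opponents faced weaker (.450) or tougher (.600) competition.
soft_second_level = rpi_sos(0.500, 0.450)
tough_second_level = rpi_sos(0.500, 0.600)

print(f"opponents played weak teams:  {soft_second_level:.3f}")
print(f"opponents played tough teams: {tough_second_level:.3f}")
```

With identical opponent records, the schedule whose opponents faced tougher competition ends up with the higher SOS, which is the point being made about playing teams that play tough teams.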

December 7th, 2010 at 9:27 PM ^

Playing tough games does impact the expected win-loss record, however. Consider the example of Oregon State. Oregon State's record is 5-7, but 2 of those losses came against Boise State and TCU. Based on that result, Oregon State is not as good as either Boise State or TCU, but can you really say that Oregon State is worse than Louisville, whose record is 6-6? (Oregon State beat Louisville this year, by the way - that was OSU's 3rd non-conference game). Therein lies the flaw with only considering wins, and not taking the "quality" of the losses into account.

December 7th, 2010 at 9:53 PM ^

Oregon St. is a good example, they played THE hardest schedule in the country. I'll give them a pass even though both their offense and defense are pretty soft.

Look at ASU's schedule though -- 2 FCS teams and Washington St account for half of their wins. Sagarin says they have the 6th hardest schedule in the country. Ok, sure... Having Oregon and Stanford on the schedule buoys the whole conference's rank, deserved or not.

Nothing nefarious on Sagarin's part but the results of the formula are skewed.

December 7th, 2010 at 9:57 PM ^

I'd buy this argument in terms of Sagarin's flaws more than anything.

December 7th, 2010 at 9:39 PM ^

It is harder to beat quality opponents, which makes it more difficult to have a winning record, and beating teams with winning records is what much of your post was about. Thus, the relevance of my post pointing out that the Pac 10 played a tougher schedule than the Big 10, which made it more difficult for their teams to obtain a winning record.

December 7th, 2010 at 10:45 PM ^

I guess I'm thinking that a highly regarded strength of schedule suggests that the team which played said schedule differentiates themselves by playing the schedule. If all the team's opponents played difficult schedules but lost the games, I'm not sure how said team differentiates themselves from their opponents' opponents by beating a bunch of "also beatens." In other words, a team's strength of schedule is not based on the strength of schedule of each of their opponents; rather a strength of schedule is a reflection of the number of meaningful wins a team's opponents have concentrated among themselves.

December 8th, 2010 at 11:36 AM ^

Honestly, these SOS metrics are somewhat useless in college football when there are so few out of conference games between BCS teams. Consider that the current AP top 15 is undefeated against OOC BCS opponents. Only VTech has any losses OOC.

The fact that one algorithm (Sagarin) rates the Pac 10 SOS highly, and another very simplistic algorithm (RPI) hammers them, shows you how little there is to determine the relative strength of the conferences, at least statistically.

December 7th, 2010 at 8:54 PM ^

I have no idea how he calculates his rankings, but when I run my ranking without weights or factoring in overall record, Oregon is 3rd. When I factor in MOV without overall record, they are 1st.

I think it is a factor of playing 9 teams that all play at least one other BCS school OOC.

December 7th, 2010 at 9:11 PM ^

... it's just the average rating of all teams played, adjusted for the home field advantage. So it's not really amenable to being fabricated. He'd have to change the rankings of the teams that go into the schedule calculation instead.

Since Sagarin thinks the Pac-10 is far stronger than the Big Ten, and since the Pac-10 plays 9 conference games, and since most of the Pac-10 teams schedule at least one decent non-conference opponent... it's not too surprising that he ranks all of the Pac-10 teams' schedules as being pretty strong. (Of the top 11 NCAA schedules according to Sagarin, 9 of them are Pac-10 teams.)

Ignoring the site adjustment, Michigan's schedule per Sagarin:

Three top-20 teams: 11 OSU, 15 Wisc, 20 MSU

Two "second 20" teams: 27 Iowa, 28 ND

Three 50-ish mediocre teams: 44 Illinois, 50 PSU, 54 UConn

Four cupcakes: 91 Purdue, 99 Indiana, 107 UMass, 158 Bowling Green

Oregon's divided similarly:

One top-20 team: 3 Stanford

Five "second 20" teams: 22 USC, 23 Ariz, 24 ASU, 31 OSU(ntOSU), 34 Cal

Three 50-ish mediocre teams: 42 Wash, 51 Tenn, 59 UCLA

Three cupcakes: 82 WSU, 168 New Mex, 184 Portland St.

Oregon's schedule isn't tons tougher than Michigan's (only 2 points on average) per Sagarin. The difference is one less cupcake and an extra 25-ish team, more or less. If you took Indiana off Michigan's schedule and turned it into a team like Texas A&M, that would pretty much make Michigan's schedule the equivalent of Oregon's per Sagarin.
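The tier breakdown above can be reproduced from the listed ranks with a short Python sketch. The ranks are the ones quoted in this comment; the exact cutoffs (20, 40, 60) are my assumption, inferred from the groupings, and are not anything Sagarin publishes.

```python
# Sagarin ranks of each team's opponents, as listed above.
MICHIGAN_OPP_RANKS = [11, 15, 20, 27, 28, 44, 50, 54, 91, 99, 107, 158]
OREGON_OPP_RANKS = [3, 22, 23, 24, 31, 34, 42, 51, 59, 82, 168, 184]


def tier_counts(ranks):
    """Count opponents per tier, using assumed cutoffs of 20, 40 and 60."""
    counts = {"top-20": 0, "second 20": 0, "50-ish": 0, "cupcake": 0}
    for rank in ranks:
        if rank <= 20:
            counts["top-20"] += 1
        elif rank <= 40:
            counts["second 20"] += 1
        elif rank <= 60:
            counts["50-ish"] += 1
        else:
            counts["cupcake"] += 1
    return counts


michigan_tiers = tier_counts(MICHIGAN_OPP_RANKS)
oregon_tiers = tier_counts(OREGON_OPP_RANKS)

print("Michigan:", michigan_tiers)
print("Oregon:  ", oregon_tiers)
```

Laid side by side, Michigan has two more top-20 opponents and one more cupcake, while Oregon has three more "second 20" opponents.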

The focus on "winning teams" strikes me as kind of odd. The Pac-10 has a handful of decent teams that ended up 6-6 or 5-7 -- for example, Arizona State who gave Wisconsin all they could handle. You credit Michigan with beating a "winning team" in UConn and another in Notre Dame, but I believe that both of those teams are not as good as ASU, for whom Oregon gets no "winning team" credit.

Going to a second level ("winning teams" by opponents) strikes me as even more contrived, as it suffers for the same reason: the Pac-10 having a lot of 0.500-ish teams, and those teams all playing a lot of games against each other.

December 7th, 2010 at 10:18 PM ^

Well, that explains the craziness. The Strength of Schedule is based on Sagarin's ratings... therefore a wacked out Strength of Schedule would just be a symptom of strange rankings.

Agree with you on ASU giving Wisconsin a run for their money (home-game for Wisconsin nonetheless.) But, I wouldn't be so quick to place ASU ahead of UConn and Notre Dame. Notre Dame and UConn each defeated 4 winning teams (meaning 50+% of their wins were meaningful) while ASU played two FCS opponents and defeated only one team with a winning record (meaning 17% of their wins were meaningful.) Coming close against Wisconsin does not erase the 3 game lead ND and UConn have on ASU; although that is only because I am of the opinion that a meaningful loss is not more important than a meaningful win.

December 7th, 2010 at 11:25 PM ^

Just for the sake of comparison, who do you think is the better team: Army or Texas? Army is 6-5 with nothing even resembling a quality win while Texas is 5-7 with a win over Nebraska. I would say that Texas is clearly the superior team, but according to your definition, Army is a quality win and Texas is not.

December 7th, 2010 at 11:52 PM ^

Army brings strength to the schedule on its own merit, due to having proven itself above average (if even only among its peers, and that is IF Army beats Navy this weekend...)

Texas would also bring strength to a schedule with its win over 2 winning teams...

Which brings more strength to a schedule? Well, I suppose that would be up to the writer of whichever formula... how Sagarin would rank them, I have no idea. I would give less emphasis to the second degree of separation from Texas' wins to UCLA, for example.

Due to the nature of schedules including 12 teams, arguments at the margin are outweighed by the fact that they lie within a spectrum of 132 games being weighed for any given team.

Oregon's FBS opponents played 121 games outside of Oregon: 12 of those were games in which they played a winning team and won (less than 10%.)

Michigan's FBS opponents also played 121 games outside of Michigan: 32 of those were games in which they played a winning team and won (more than 25%.)

Those are two vastly different levels of achievement, and they don't give Oregon the benefit of the doubt to cover the fact that Oregon only played 3 winning teams vs. Michigan playing 7 winning teams. Like I said, winning teams directly on the schedule were the first thing I considered. Then I assumed that Oregon's opponents, although on average they lost more games than they won, must have had some great wins to make up for the losses. 12 wins against winning teams does not outweigh 32. Therefore, the losing teams on Oregon's schedule are not somehow superior to the winning teams on Michigan's schedule.

December 8th, 2010 at 8:58 AM ^

There are obviously different ways to calculate SOS, and I am no Mathlete, so I can't really comment on Sagarin's method other than to say I doubt it is "rigged against TCU".

I will point out that if you went to the RPI ratings which I believe are a reference point for NCAA BBall tourney selection and also related to the PWR used in NCAA Hockey tourney selection that Oregon's schedule is 86th and Michigan's schedule is 16th.

I am a little concerned with the data, as our conference record is listed as 2-5. It seems as if they don't count the Illinois game as a win but as a tie.

On a positive note, the RPI predicts us to beat Miss St. by 5!

December 8th, 2010 at 9:11 AM ^

The RPI definitely gives a more realistic assessment (Granted, I am only basing that on Notre Dame's schedule being ranked 2nd... which makes sense if you take two seconds to look at their schedule; as well as the position of Michigan's, Mich St, and Oregon's schedules relative to each other.)

And yes, that blip of Michigan being given one less win and Illinois one less loss is a bit perplexing. Quickly glancing down the list, I didn't see anyone else who only had 11 games acknowledged... must be something regarding going beyond double OT?

December 13th, 2010 at 12:59 AM ^

Purely adding up records of opponents (as the RPI does) tells you little. Going 12-0 against a schedule with four 11-1 teams, four 6-6 teams, and four 1-11 teams is much harder than doing the same against 12 6-6 teams, but RPI says the strength of schedule is the same. (On the other hand, going 0-12 against the varied schedule would be much worse than doing so against 12 average teams. The latter says you can't beat average teams; the former says you can't even beat awful ones.) How bad your cupcakes are shouldn't matter much at all; the difference between facing a team good enough to beat you one out of three times and one that would win one out of four is probably ten times as significant as the difference between playing one you would beat 99% of the time and one you would beat 99.99% of the time.
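To put rough numbers on that, here is a minimal Python sketch of the two 12-game schedules from the example. The average opponent winning percentage is computed directly from the records. The sweep probability uses a Bradley-Terry style formula, P(A beats B) = s_A / (s_A + s_B), and the mapping from a record to a strength (wins divided by losses) is purely an assumption for illustration, not how any real rating is fitted.

```python
# Opponent records as (wins, losses), straight from the example above.
VARIED = [(11, 1)] * 4 + [(6, 6)] * 4 + [(1, 11)] * 4
UNIFORM = [(6, 6)] * 12


def avg_opp_win_pct(schedule):
    """Combined opponent winning percentage: the first-level input to a records-only SOS."""
    wins = sum(w for w, _ in schedule)
    games = sum(w + l for w, l in schedule)
    return wins / games


def strength(record):
    """Illustrative assumption: strength = wins / losses (needs at least one loss)."""
    wins, losses = record
    return wins / losses


def sweep_probability(own_strength, schedule):
    """Chance of winning every game under the Bradley-Terry win probability."""
    prob = 1.0
    for record in schedule:
        opp = strength(record)
        prob *= own_strength / (own_strength + opp)
    return prob


own = strength((11, 1))  # hypothetical team of roughly 11-1 quality

print("avg opp win pct:", avg_opp_win_pct(VARIED), avg_opp_win_pct(UNIFORM))
print("P(12-0) varied :", sweep_probability(own, VARIED))
print("P(12-0) uniform:", sweep_probability(own, UNIFORM))
```

Both schedules average exactly .500, yet under these assumed strengths the sweep against the varied schedule is several times less likely, because the four coin-flip games against 11-1 teams dominate everything else.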

For what it's worth, I've been running the Bradley-Terry method (KRACH) this year and it puts Oregon's SOS at #12 and TCU's at #39. For teams with high winning percentages, your toughest opponent or two are the largest factors (and at the opposite end, teams that lose a lot are defined largely by their weakest opponents) because the win probability for that game is by far the most sensitive to changes in your rating. Oregon's is Stanford, TCU's is Utah. That's a pretty big difference. Even if you look at the lower games (which are really less significant):

- USC v. Baylor - slight advantage Oregon

- Arizona v. Air Force - very slight advantage TCU

- Washington v. SDSU - about even

- Oregon St v. Oregon St - even

- Arizona St v. BYU - advantage Oregon

- Cal v. SMU - advantage Oregon

- Tennessee v. Colorado St - huge advantage Oregon

- UCLA v. Wyoming - advantage Oregon

- Wazzu v. UNLV - slight advantage Oregon (though at this point, does it really matter? both are going to get absolutely railed)

- 1-AA v. 1-AA - even

Part of the difficulty, admittedly, is the recursive sort of nature of these judgments. SDSU finished two games better than Washington, but did so against a vastly weaker schedule themselves, so they come out about even. (The bottom of the MWC is so bad that beating them counts for almost nothing; BYU was SDSU's fifth-toughest opponent and Washington's ninth-toughest. And Washington's weakest opponent, Wazzu, would be favored against half of SDSU's schedule.)
